NVIDIA GeForce RTX 4090

It’s been a little more than two years since the release of the GeForce RTX 3080 and RTX 3090. At the time, the RTX 3080 offered such incredible performance over the previous generation of GeForce cards, at a very reasonable $699, that NVIDIA had a bona fide winner on its hands. Of course, many things happened that made it hard to purchase the RTX 3080 at that price, with scarce availability making it tough to find one in the wild.

At GTC 2022 a few weeks ago, NVIDIA announced their next generation Ada Lovelace cards in the GeForce RTX 4080 12GB, GeForce RTX 4080 16GB, and the GeForce RTX 4090 - with the RTX 4090 being the first one to be released. NVIDIA was very kind to send along the GeForce RTX 4090 to review and today we’ll go through what’s new and compare it to what I currently have on hand in the GeForce RTX 3080 Ti and the GeForce RTX 3090 Ti.

Spec wise, here’s how it stacks up to both the RTX 3090 Ti and RTX 3080 Ti:

| | GeForce RTX 4090 | GeForce RTX 3090 Ti | GeForce RTX 3080 Ti |
| --- | --- | --- | --- |
| SM | 128 | 84 | 80 |
| CUDA Cores | 16384 | 10752 | 10240 |
| Tensor Cores | 512 (4th gen) | 336 (3rd gen) | 320 (3rd gen) |
| RT Cores | 128 (3rd gen) | 84 (2nd gen) | 80 (2nd gen) |
| Texture Units | 512 | 336 | 320 |
| ROPs | 176 | 112 | 112 |
| Base Clock | 2235 MHz | 1560 MHz | 1365 MHz |
| Boost Clock | 2520 MHz | 1860 MHz | 1665 MHz |
| Memory Clock | 10501 MHz | 9500 MHz | 9500 MHz |

As you can see, the RTX 4090 has some solid increases across the board over the RTX 3090 Ti. The SM count is up by 44 over the previous top end card, and these SMs deliver up to 2X performance with better efficiency. The RTX 4090 gets a whopping 5632 more CUDA cores than the RTX 3090 Ti. Tensor cores are increased by 176 and get some architectural changes that make them fourth generation Tensor cores. RT cores are increased by 44 and they too get a generational leap over the 3090 Ti.
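The generational differences can be recomputed directly from the spec table. The short sketch below uses only the RTX 4090 and RTX 3090 Ti figures listed there; the names `SPECS` and `spec_deltas` are just illustrative.

```python
# Unit counts copied from the spec table: (RTX 4090, RTX 3090 Ti).
SPECS = {
    "SM": (128, 84),
    "CUDA Cores": (16384, 10752),
    "Tensor Cores": (512, 336),
    "RT Cores": (128, 84),
    "Texture Units": (512, 336),
    "ROPs": (176, 112),
}


def spec_deltas(specs):
    """Return the absolute increase of the new card over the old one."""
    return {name: new - old for name, (new, old) in specs.items()}


deltas = spec_deltas(SPECS)
for name, diff in deltas.items():
    old = SPECS[name][1]
    # Show both the raw difference and the relative increase.
    print(f"{name}: +{diff} ({diff / old:.1%} more than the RTX 3090 Ti)")
```

Running it shows that most unit counts grow by roughly half over the RTX 3090 Ti, since they scale with the SM count.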

About the only things staying the same are the memory data rate and the amount, with 24GB of GDDR6X memory. The memory interface also stays at 384-bit, giving the card a total memory bandwidth of 1008 GB/s. But everything else gets a nice update, along with an increase in memory clock speed to 10501 MHz.
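The bandwidth figure follows from the bus width and the per-pin data rate. As a rough sketch, the data rate implied by the 384-bit interface and 1008 GB/s can be backed out like this; the function names are hypothetical, and the formula is the standard bandwidth = data rate × bus width / 8.

```python
def data_rate_gbps(bandwidth_gb_s, bus_width_bits):
    """Per-pin data rate (Gbit/s) implied by a total bandwidth in GB/s."""
    return bandwidth_gb_s * 8 / bus_width_bits


def bandwidth_gb_s(rate_gbps, bus_width_bits):
    """Total bandwidth (GB/s) for a given per-pin data rate and bus width."""
    return rate_gbps * bus_width_bits / 8


# Figures from the review: 384-bit interface, 1008 GB/s total bandwidth.
rate = data_rate_gbps(1008, 384)
print(f"Implied data rate: {rate} Gbps per pin")
print(f"Round trip: {bandwidth_gb_s(rate, 384)} GB/s")
```

Because bus width and data rate are unchanged from the RTX 3090 Ti, the total memory bandwidth is unchanged too.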

Whereas the RTX 3090 Ti was made using Samsung’s 8nm process, NVIDIA went back to TSMC for the RTX 4090, which is fabricated on a 4nm process, nominally half the size of Samsung’s. In theory, the smaller process yields more chips per wafer in addition to increasing their speed and efficiency.

The GeForce RTX 4090 Founders Edition is a beefy card coming in a three slot configuration like the GeForce RTX 3090 and the GeForce RTX 3090 Ti. In fact, the card’s dimensions are really close to those of the GeForce RTX 3090 and RTX 3090 Ti. The RTX 4090 comes in at 304mm long, which makes it shorter than the two RTX 3090 cards at 313mm. You can see the RTX 4090 is slightly wider than the RTX 3090 Ti, but not by much, and the card still fits in the three slot configuration pretty easily.

Among the physical improvements are an increased size in both fans. The increased size will help improve the amount of air that gets pushed and pulled through the fins to help cool the card down better. NVIDIA says there’s a 20% improvement in air flow. Speaking of the fins, the volume of them has increased as well. Combined with the larger, improved fans, the two should help improve the thermal cooling performance of the card.

On top of the card sits the 16-pin 12VHPWR power connector that was also on the RTX 3090 Ti. This connector conforms to the new ATX 3.0 standard, with the four extra pins helping the card communicate with the power supply. Now, you don’t need to have one of these new power supplies to run the GeForce RTX 4090. Using the included four 8-pin to 12VHPWR dongle worked just fine in my testing. Also, you don’t have to worry about it catching on fire, as the reports you might have seen were shown to involve extreme circumstances that pretty much no one will experience. But if you do have one of the new ATX 3.0 power supplies, cable management will be a lot easier as you’ll only need one 16-pin cable running from it to the GeForce RTX 4090. The RTX 3090 Ti came with a dongle that went from three 8-pin connectors to the 12VHPWR connector but didn’t have the four extra pins underneath.

Speaking of power, rumors were that the TGP of the GeForce RTX 4090 was going to be somewhere in the 600W range, which would have been pretty crazy. Thankfully, the TGP of the RTX 4090 is officially 450W, same as the GeForce RTX 3090 Ti. That’s still a good amount of power and it’s recommended that you have a power supply capable of at least 850W just to be on the safe side. But for those thinking they’d have to spend for a 1000W or above power supply, you should be good to go if you have an 850W or above. That doesn’t mean the card can’t go up to the 600W range, and I’m guessing some AIBs will push it as far as it can go with the large coolers attached to it. In the end, the power requirements are the same as the card launched in March, and you won’t need to get an ATX 3.0 power supply to run it. But there’s something kind of interesting about power that we’ll get to later on in this review.

I/O is the same, with three DisplayPort 1.4a ports and one HDMI 2.1 port. If I remember correctly, the reason the GeForce RTX 4090 doesn’t have DisplayPort 2.0 is that the card was finalized before that standard was, and NVIDIA is also not saturating the bandwidth of 1.4a. Either way, I don’t think it’s that big of a deal for the RTX 4090 to ship without DisplayPort 2.0, and it’ll probably be better served on future cards from NVIDIA. The bracket is a true three slot bracket, so hopefully this helps keep the card in place, unlike some AIB three slot cards that only come with a two slot bracket.

A few new things were being showcased from NVIDIA besides the RTX 4090 that are going to be coming or available to give gamers some new tools to play with. Let’s talk about their biggest touchpoint with the Ada Lovelace cards, DLSS 3.

DLSS 2 has been pure magic and offers, in most situations, a nice performance uplift at similar or improved image quality. DLSS 2 works wonders and I think it’s one of NVIDIA’s best techs to improve visual quality and performance.

DLSS 3 introduces a few improvements and frame generation. On a top level, it will generate frames using AI, motion vectors from the game, and a few other inputs to offer better frames per second. DLSS 3 will insert a generated frame in between game created frames, but at a small cost of latency. To combat this, DLSS 3 relies on another tech, NVIDIA Reflex, to reduce the small latency that frame generation introduces. Frame generation is something NVIDIA has been researching even before DLSS Super Resolution, so it took them a while to get it to a point where they feel it’s ready for mass consumption.
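To make the idea of inserting a generated frame between two game-rendered frames concrete, here is a deliberately naive toy sketch. It is not NVIDIA's algorithm: DLSS 3 uses AI, game motion vectors, and other inputs, while this example simply averages two frames, and every name in it (`blend`, `insert_generated_frames`) is hypothetical.

```python
def blend(frame_a, frame_b, t=0.5):
    """Naive stand-in for frame generation: a per-pixel linear blend."""
    return [(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def insert_generated_frames(rendered):
    """Interleave one generated frame between each pair of rendered frames."""
    out = []
    for prev, nxt in zip(rendered, rendered[1:]):
        out.append(("rendered", prev))
        # The in-between frame needs BOTH neighbors, so in this toy scheme
        # it cannot be shown until the next rendered frame already exists.
        out.append(("generated", blend(prev, nxt)))
    if rendered:
        out.append(("rendered", rendered[-1]))
    return out


# Three tiny two-pixel "frames" standing in for real images.
rendered = [[0.0, 0.0], [10.0, 20.0], [20.0, 40.0]]
sequence = insert_generated_frames(rendered)
for kind, frame in sequence:
    print(kind, frame)
```

Three rendered frames turn into five displayed frames. In this toy version, the need to wait for the following rendered frame also illustrates why a scheme like this carries a small latency cost.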

Some people have feared that when using frame generation, while it may increase FPS, gameplay might feel more laggy. Well, I am happy to report this doesn’t seem to be the case in Microsoft Flight Simulator and Cyberpunk 2077. Although both games aren’t in the competitive gaming space, both benefit from DLSS 3 frame generation without harming the gaming experience.

Having played a lot of VR, I’ve come across something similar with Meta Quest’s Asynchronous Spacewarp. That was also used to generate frames to smooth out performance. There were other things that helped improve picture quality and deal with latency in that tech, and from my experience it worked really well in a lot of titles. Trust me, you don’t want any latency or a lot of visual anomalies in VR or you’ll get sick pretty easily, so while it seemed a lot of people were apprehensive when NVIDIA announced their frame generation tech in DLSS 3, I wasn’t that worried it would affect how a game felt when playing with it on.

DLSS 3 is a pretty big thing for NVIDIA since currently, only Ada Lovelace cards have the hardware that’s capable of it. Sorry to those that own the previous generation of cards and want to experience DLSS 3. That’s not to say it won’t come to the 3000 series of cards in the future, but for now it’s going to be only on the 4000 series of cards from NVIDIA.

Let’s start off though with the basic tests of a few games. My system consists of:

AMD Ryzen 9 5950X

32GB DDR4 3600 Team T-Force XTREEM RAM

MSI MAG X570 Tomahawk WiFi

Sabrent 1TB Rocket NVMe 4.0 Gen4 PCIe M.2

Samsung 970 EVO Plus SSD 2TB M.2 NVMe

LG C2 OLED 4K TV

521.90 NVIDIA drivers

The only thing that’s changed since my last video card review is that I now have a 4K 120Hz display that’s G-SYNC compatible. And there’s a reason I’ve waited this long to upgrade to 4K 120Hz. I’ve been waiting for a card to really handle 4K 120Hz, and I think from the scores you’ll see the GeForce RTX 4090 is more than capable of it.

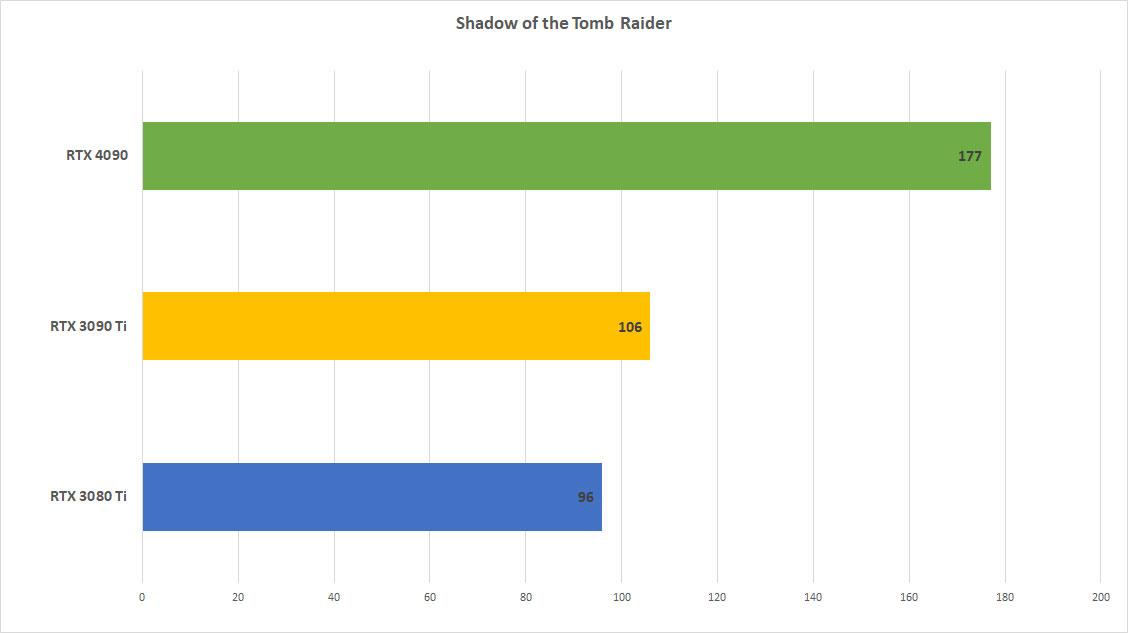

All tests were done at 4K and at the highest settings possible except when noted. Tests were run at least three times and then the scores were averaged out. Let’s start with some titles running in straight rasterization mode. Whenever possible, I used the built-in benchmark and took its scores. Shadow of the Tomb Raider was one title that always produced the same score no matter how many times I ran it for that particular card, hence the whole number in the averages.
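The averaging step is simple enough to sketch. The run values below are made up purely for illustration and are not scores from this review; `average_score` is a hypothetical helper.

```python
from statistics import mean


def average_score(runs, min_runs=3):
    """Average a list of benchmark runs, requiring at least `min_runs` results."""
    if len(runs) < min_runs:
        raise ValueError(f"need at least {min_runs} runs, got {len(runs)}")
    return round(mean(runs), 1)


# Hypothetical FPS results from three passes of a built-in benchmark.
hypothetical_runs = [101.2, 99.8, 100.6]
print(average_score(hypothetical_runs))

# A benchmark that reports the same score every run averages to a whole number.
print(average_score([100.0, 100.0, 100.0]))
```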

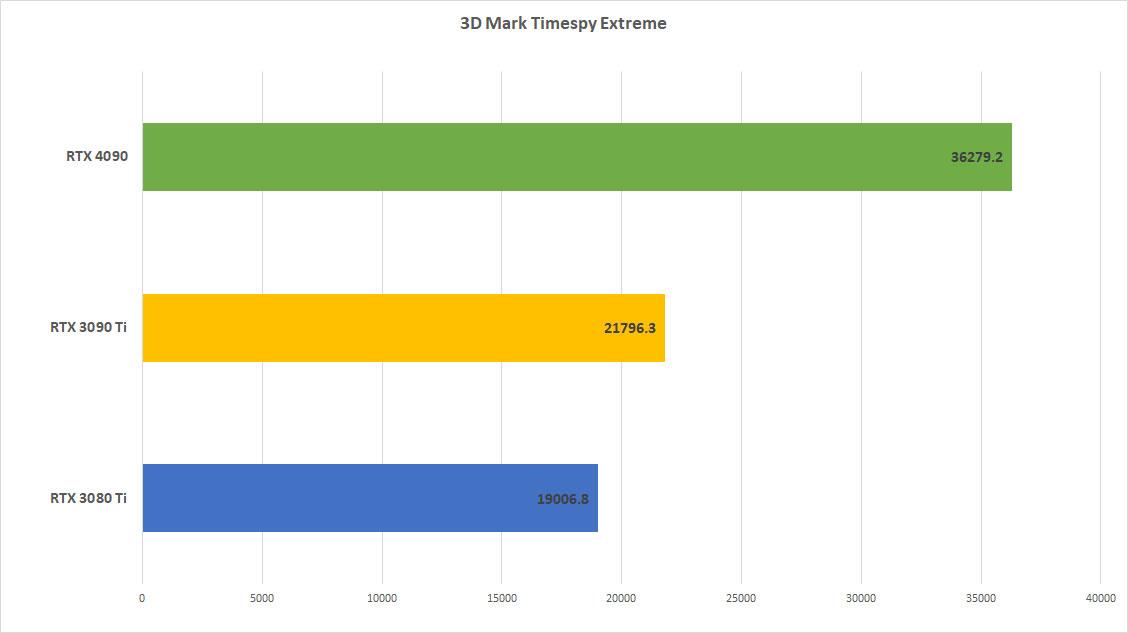

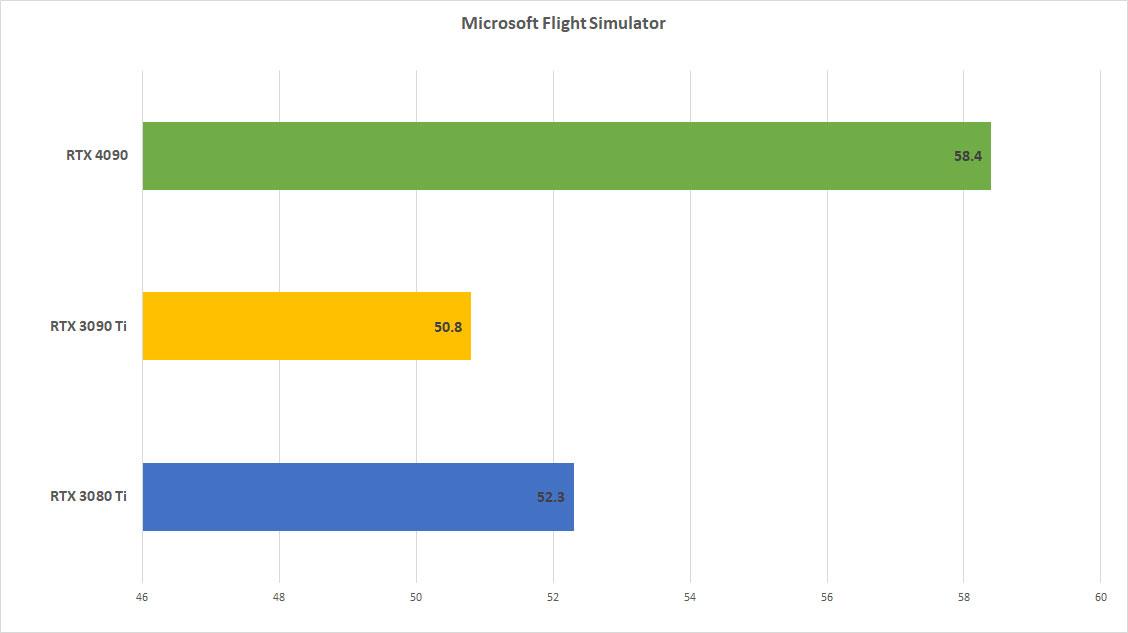

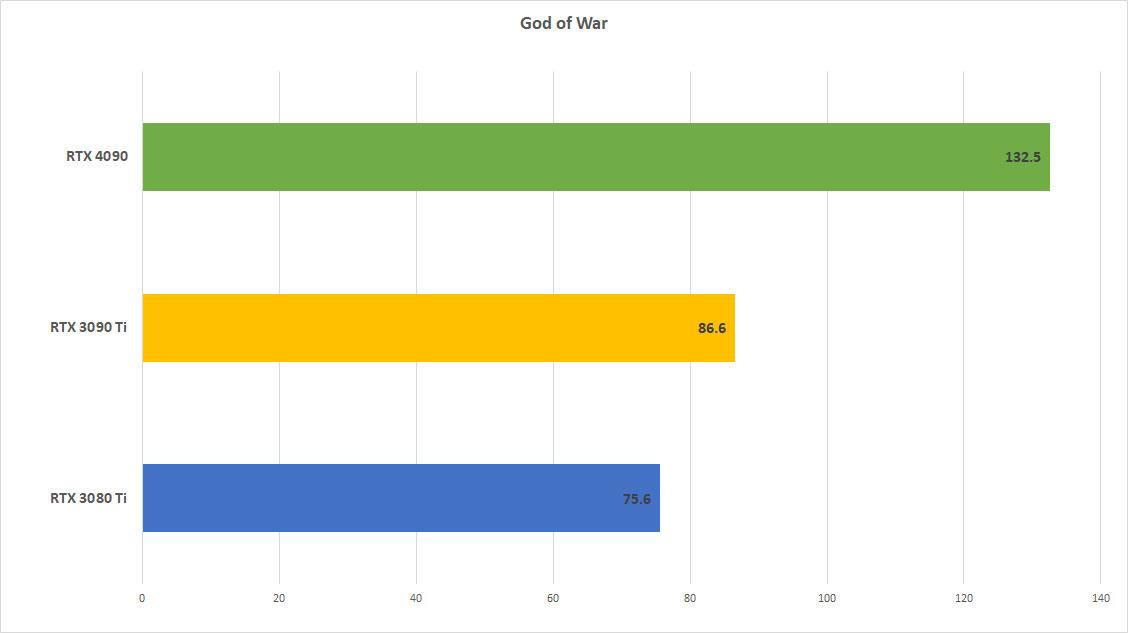

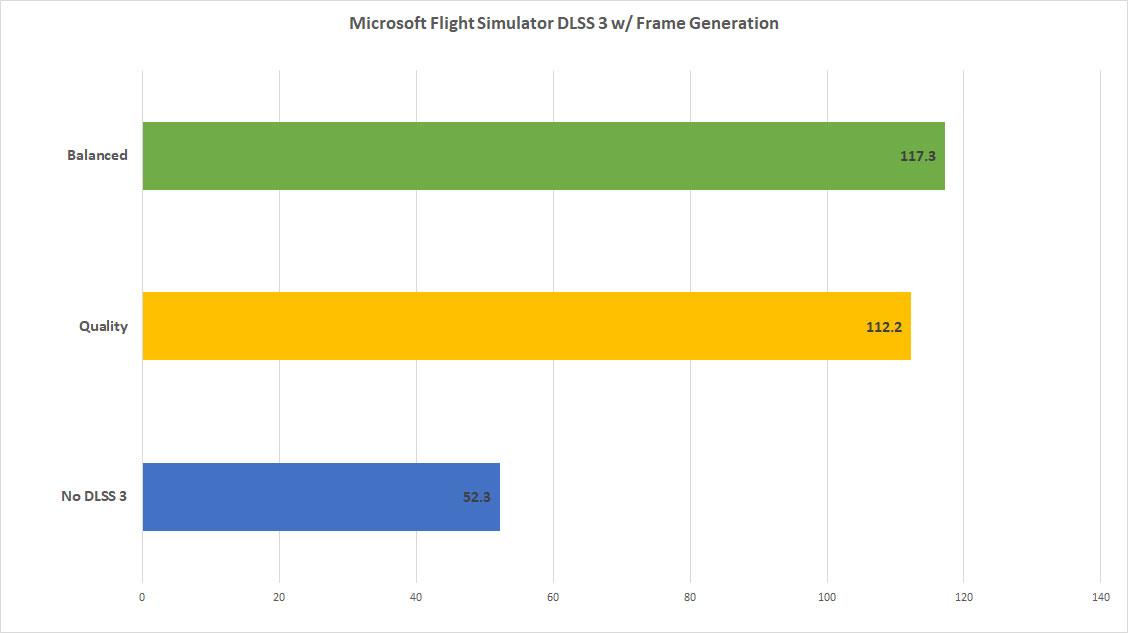

Just on pure performance alone without any DLSS involved, the GeForce RTX 4090 outperforms the GeForce RTX 3090 Ti in Control by 52.2%, Cyberpunk 2077 by 40.7%, God of War by 53%, Red Dead Redemption 2 by 48.9%, and Shadow of the Tomb Raider by 67%. There weren’t as many gains in a few other titles. Marvel’s Spider-Man got only 16.4% increase over the RTX 3090 Ti, and there were minor improvements in Microsoft Flight Simulator because that’s more of a CPU heavy game. But more on Microsoft Flight Simulator in the DLSS 3 portion of this review.
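Percentages like these come from comparing average frame rates between the two cards. A minimal sketch of the calculation follows, using hypothetical FPS values rather than the actual scores in the charts.

```python
def percent_uplift(new_fps, old_fps):
    """Percentage by which new_fps exceeds old_fps."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps - old_fps) / old_fps * 100


# Hypothetical averages, NOT measured results from this review.
example_new, example_old = 150.0, 100.0
print(f"{percent_uplift(example_new, example_old):.1f}% faster")
```

Note that the baseline is the older card, so a 50% uplift means the new card renders one and a half times as many frames in the same time.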

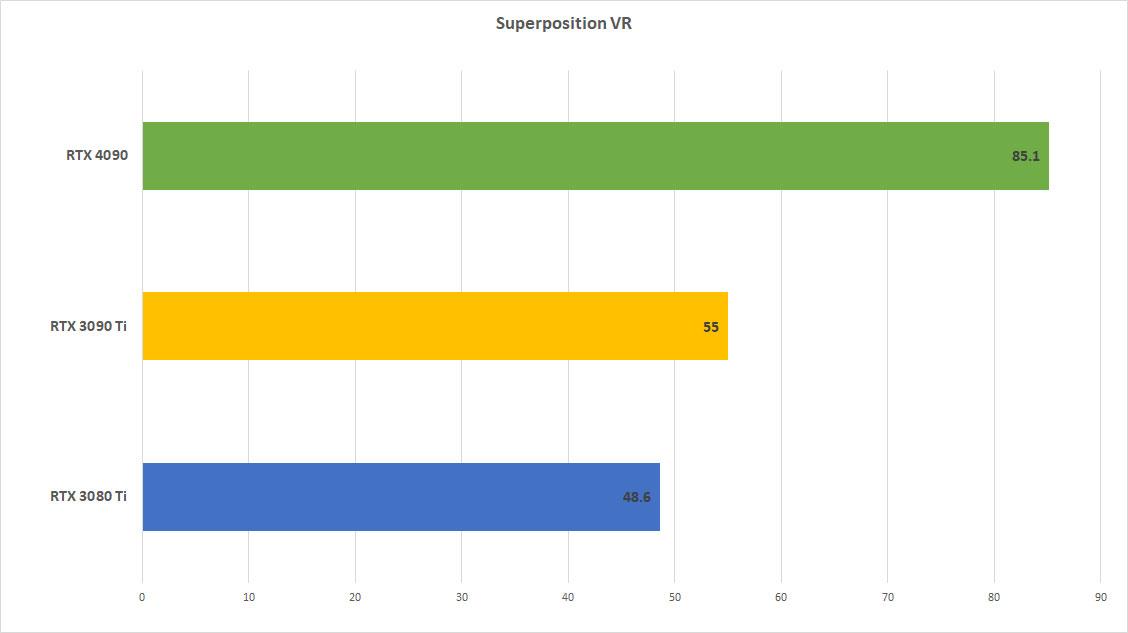

On the VR front, I tried using OpenVR Benchmark but for some reason the scores of the RTX 4090 were inconsistent with what I thought it should be getting. Also, the benchmark was stuttering pretty badly at times, so we’ll see if it gets updated once the RTX 4090 has been available long enough for the author to test with. That benchmark hasn’t been updated in a while though, so I moved on to Superposition from Unigine, which has a VR testing setting. It splits the image into two pictures and runs through 17 scenes, allowing you to set the resolution for each eye as well as the quality of the VR image. I chose the resolution of the HP Reverb G2, which sits at 2160x2160 per eye, and ran through the tests with all three cards.
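For a sense of scale, the per-eye resolution can be compared with a single 4K frame. This sketch only counts pixels; it assumes a 4K frame of 3840x2160 and ignores any extra VR rendering overhead.

```python
def pixel_count(width, height, views=1):
    """Total pixels rendered per frame across all views."""
    return width * height * views


vr_pixels = pixel_count(2160, 2160, views=2)  # two eyes at 2160x2160
uhd_pixels = pixel_count(3840, 2160)          # one 4K frame
ratio = vr_pixels / uhd_pixels

print(f"VR (both eyes): {vr_pixels:,} pixels")
print(f"4K:             {uhd_pixels:,} pixels")
print(f"VR renders {ratio:.3f}x the pixels of a 4K frame")
```

So the simulated VR test pushes slightly more pixels per frame than the 4K tests used elsewhere in this review.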

The RTX 4090 comes in at a 54.7% increase over the 3090 Ti in a simulated VR mode, and a whopping 75.1% increase over the card I mostly used the past year, the RTX 3080 Ti. To make sure things ran well, I ran through a few of the games in my library with the performance meter on and saw no red lines indicating dropped frames, and I didn’t notice any hitches or stutter. The card performed incredibly well with my Valve Index connected.

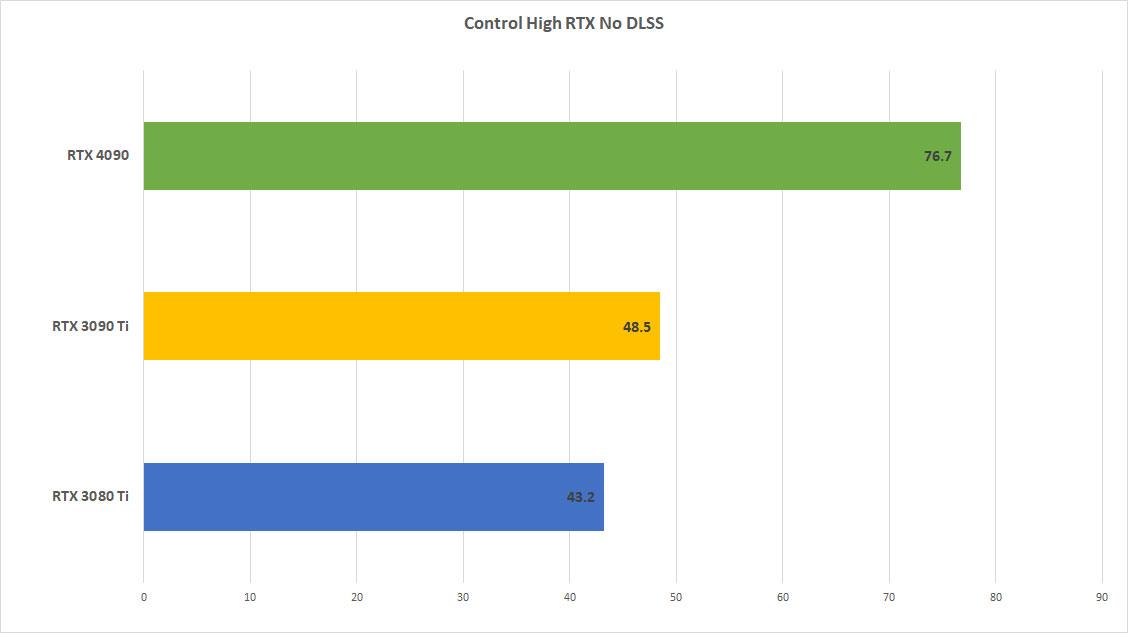

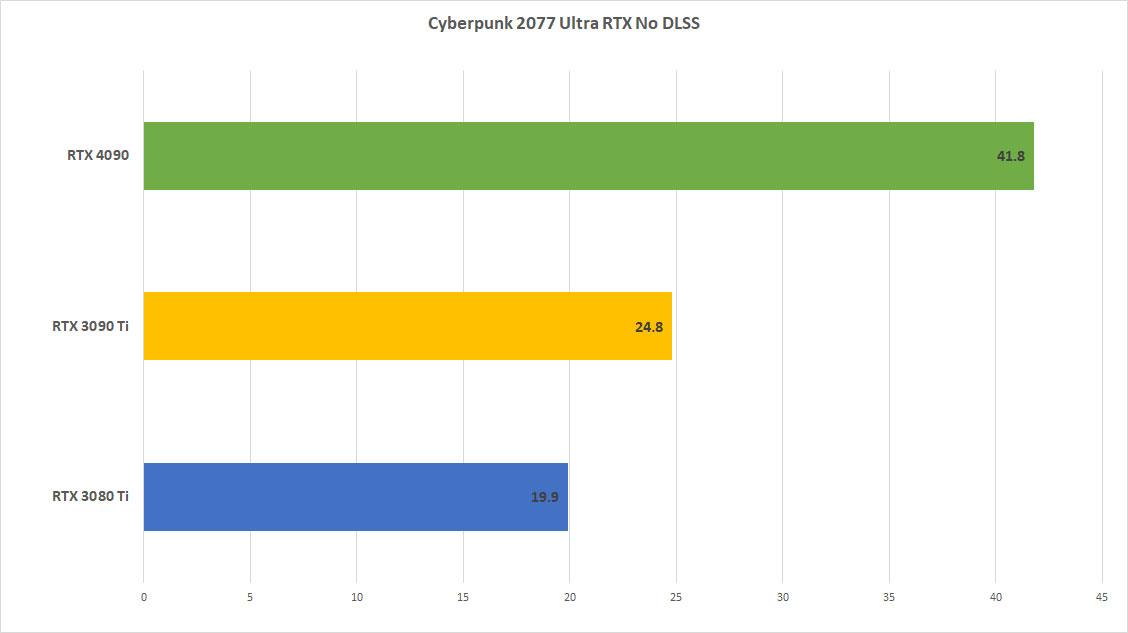

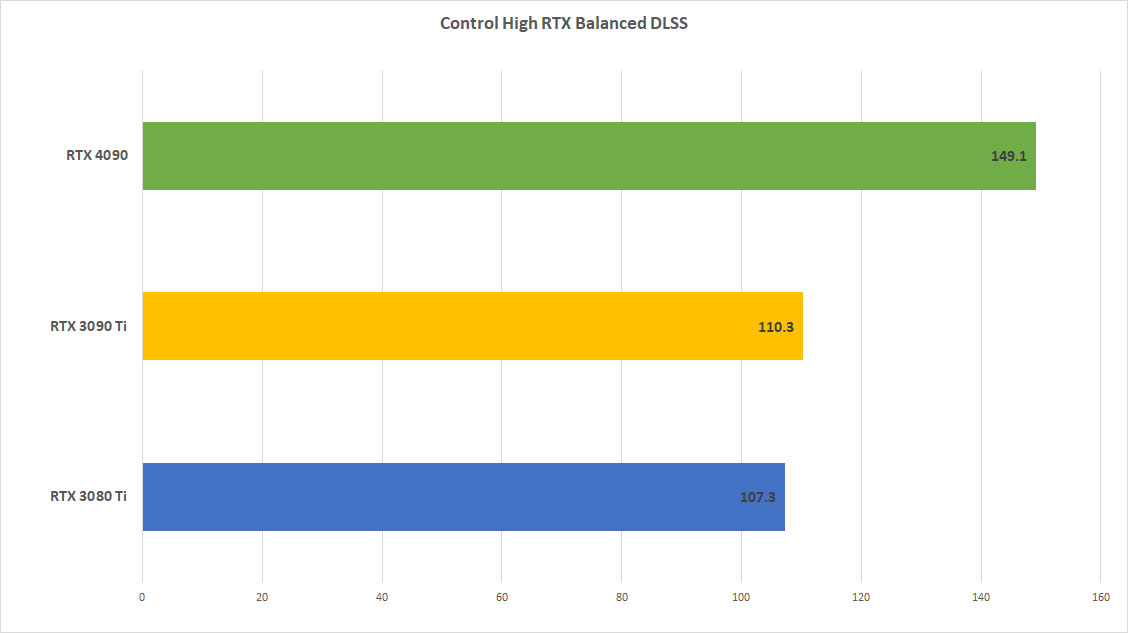

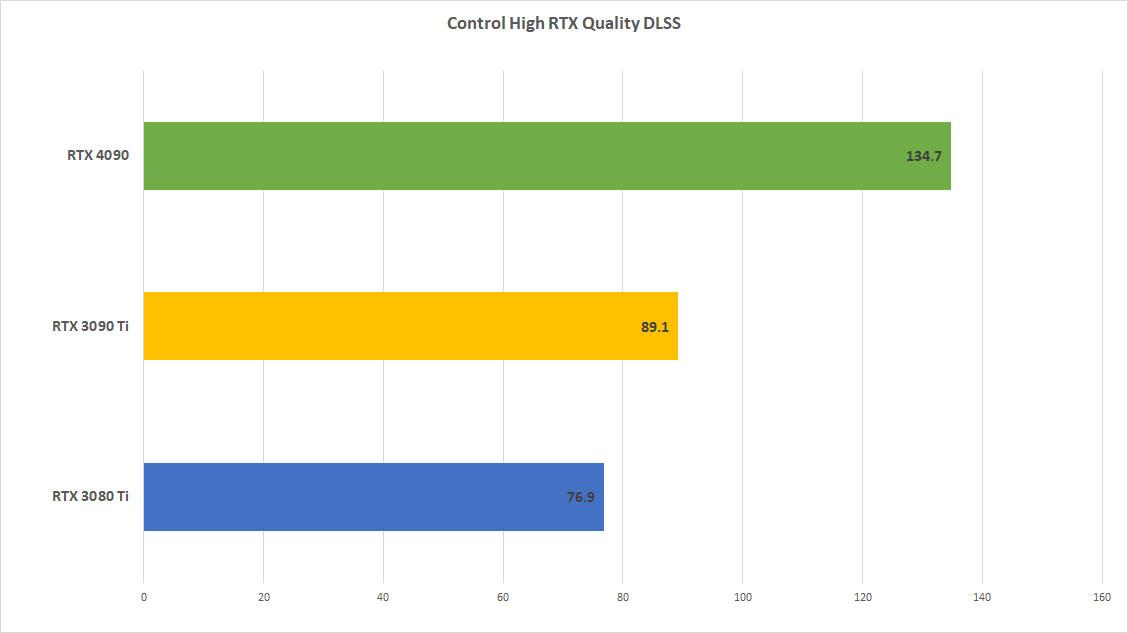

Moving on to RTX, here are the numbers when enabling ray tracing in various games. Not all games use every RTX feature; some just use a subset of those available. When there were options, I turned on everything to the maximum except for Cyberpunk 2077. Rather than using Psycho RTX on that one, I stuck with Ultra.

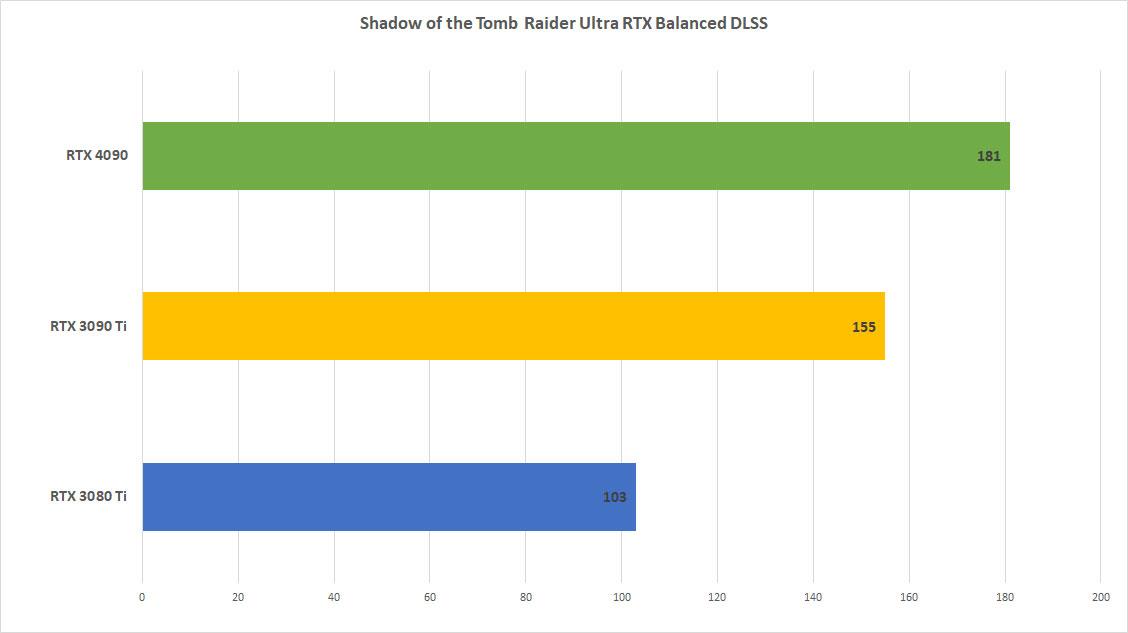

Again the GeForce RTX 4090 starts to really produce some great averages without the need for DLSS with RTX on. Control was above the 60 FPS range coming in at 76.7 while Shadow of the Tomb Raider had a whopping 122 average using the built in benchmark.

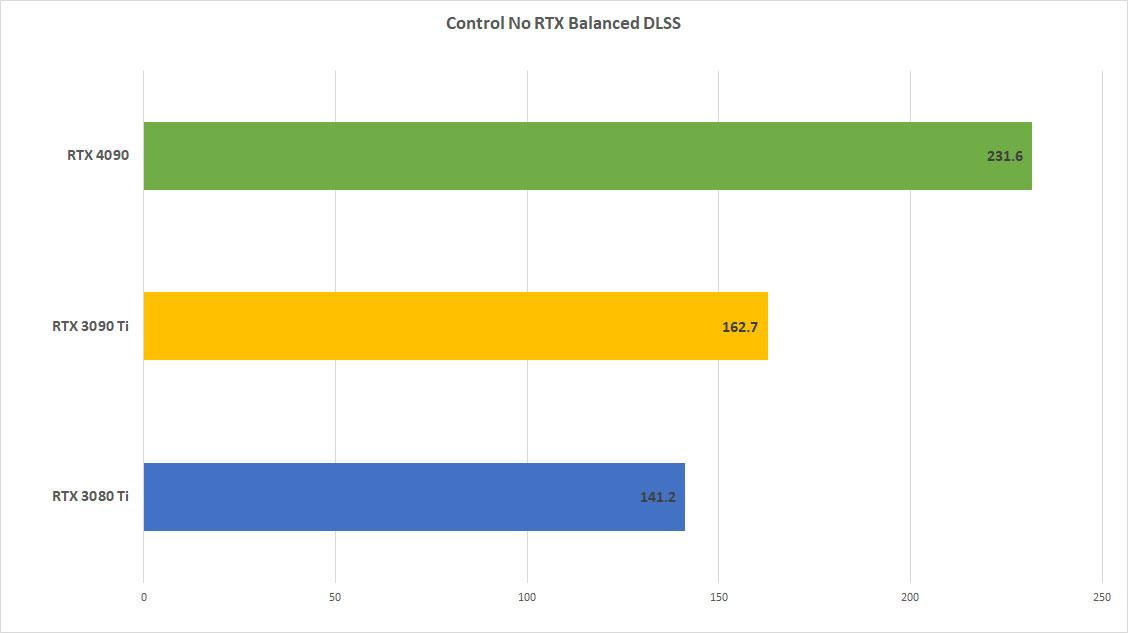

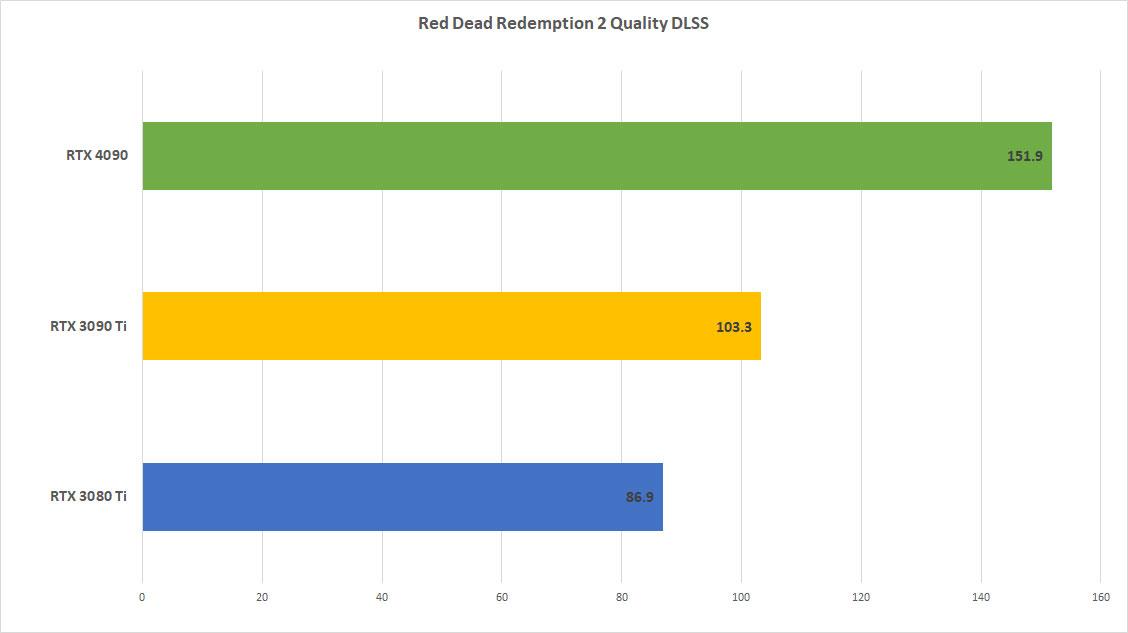

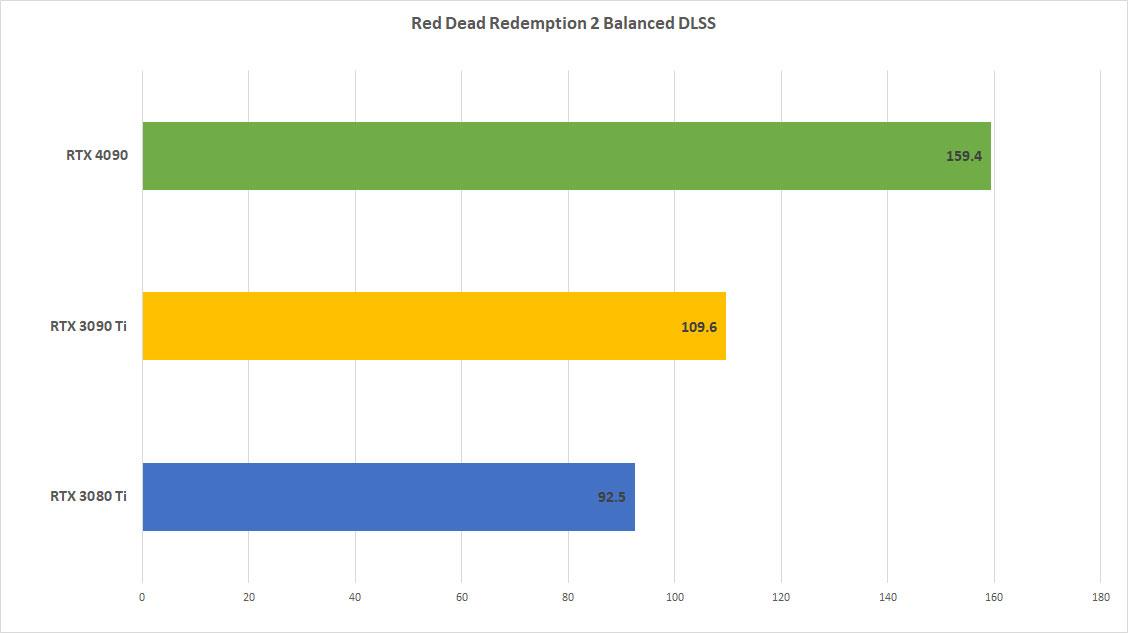

I ran DLSS 2 in both Quality and Balanced mode while omitting Performance mode. For me, Performance mode almost never produced a good enough image quality and I found more often than not, the picture was a tad bit fuzzy. Quality and Balanced produced the best images in my opinion, and those are the modes I recommend. Let’s take a screenshot from Control as an example. Looking at the three, you can see the edge of the file cabinet start to become dots rather than a straight line as you go from Quality to Balanced. The picture frame also becomes less accurate and the picture inside becomes fuzzier. Not all games are going to react the same with the various DLSS settings, but for the most part this is what I experience and why I usually stick with Quality or Balanced.

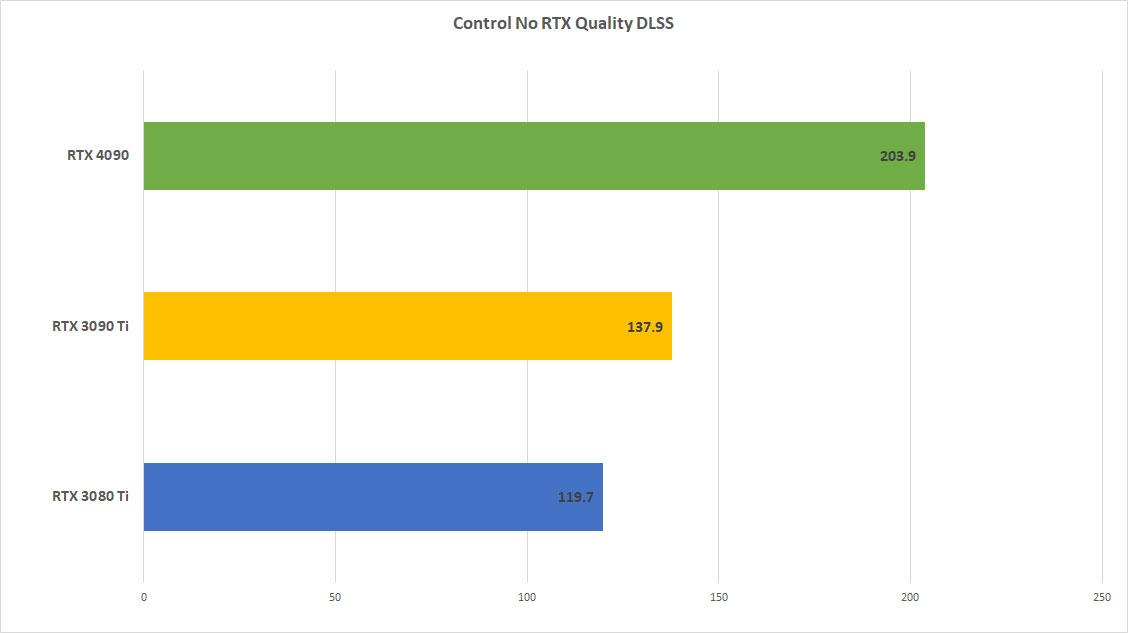

Here are scores using DLSS 2 with and without RTX enabled in some titles.

As you can see, titles other than Microsoft Flight Simulator (which I didn't include because the scores were very much minimal gains over the base line) can really benefit from having DLSS 2 turned on. It’s an awesome tech as I’ve said in the past, and I find myself going with Quality mode even if I am running pretty well at native resolution.

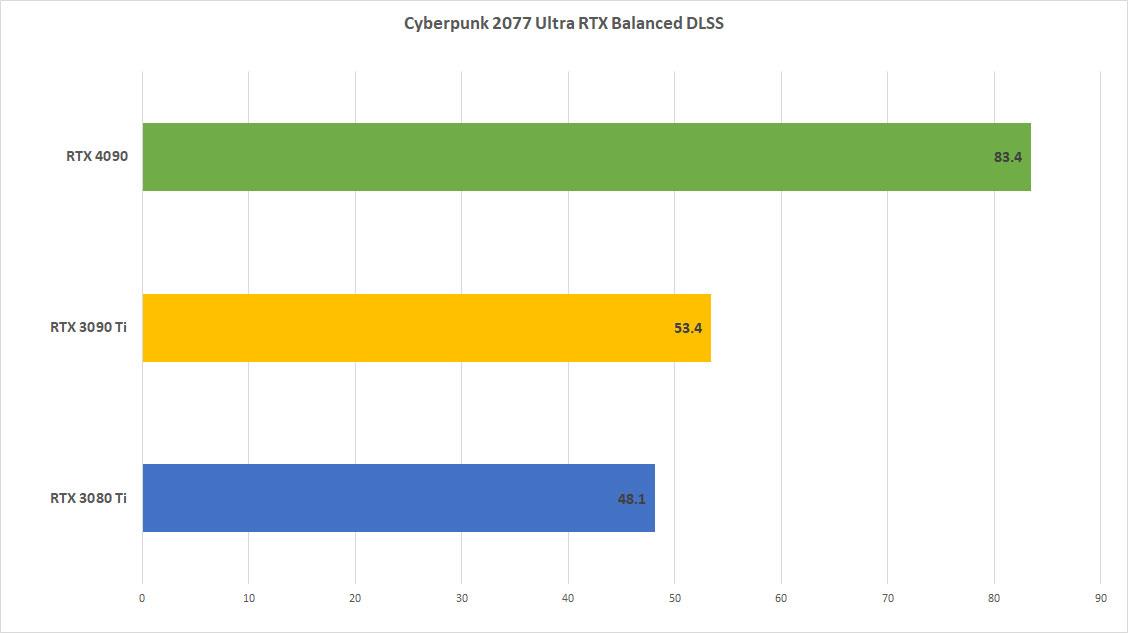

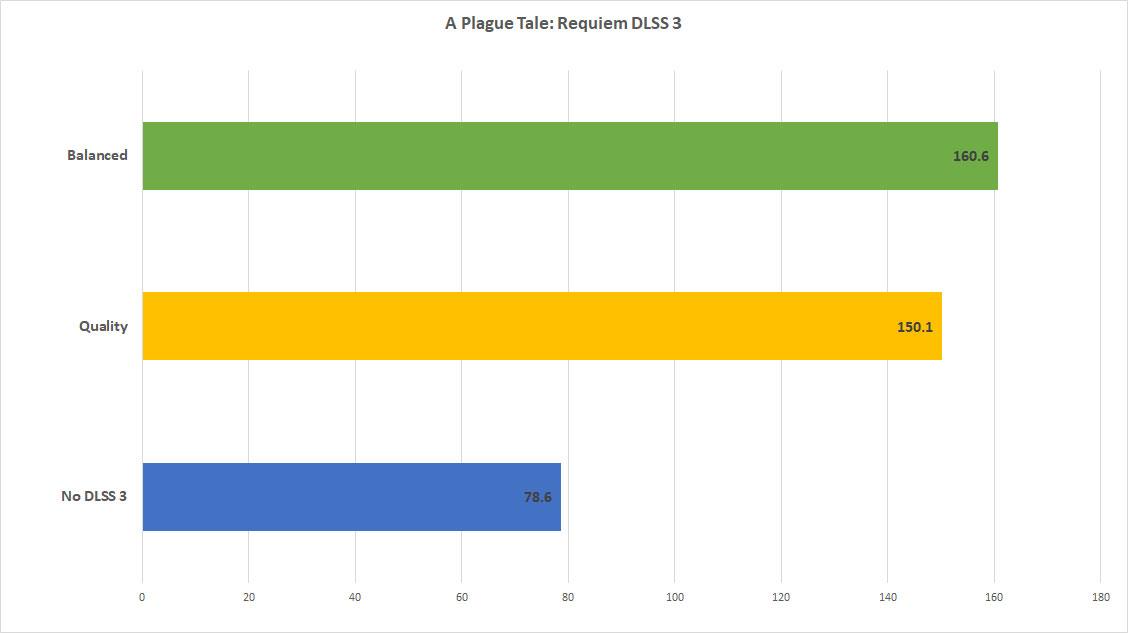

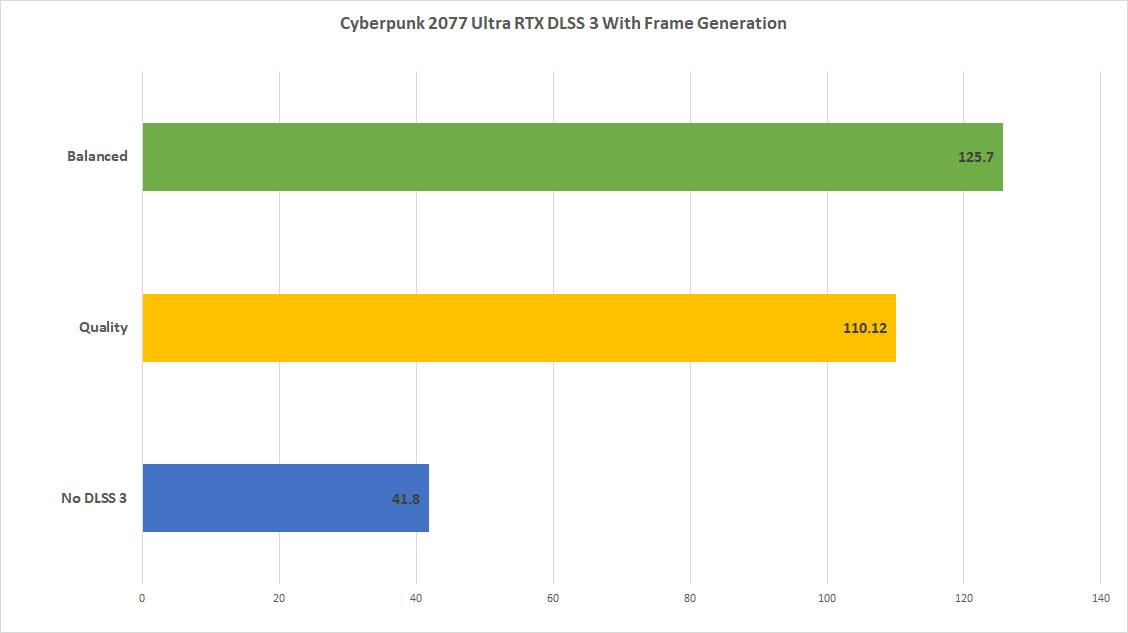

Now let’s go through some DLSS 3 numbers. There were a few titles made available that had DLSS 3 implementations and I chose to test Cyberpunk 2077, Microsoft Flight Simulator, and A Plague Tale: Requiem. Frame generation is the thing that really pushes DLSS 3, and it works in conjunction with DLSS Super Resolution and NVIDIA Reflex to deliver a more performant experience, offset the latency that Frame Generation introduces, and sometimes reduce power consumption.

Yes, you read that correctly. When I was running games on the RTX 4090 with DLSS 2 and 3, there were times when my UPS, which shows me the power draw of the computer and monitor, reported a lower draw than when running without DLSS enabled. That’s also backed up by MSI Afterburner and NVIDIA FrameView, which showed lower power consumption while I was benchmarking with DLSS than without it.

I didn’t see this when testing DLSS 2 with the RTX 3080 Ti or the RTX 3090 Ti. Power draw was pretty consistent on those two cards whether DLSS 2 was enabled or not. But not on the RTX 4090. With DLSS 2 or 3 enabled, it wasn’t uncommon to see my computer reduce power usage while still delivering good picture quality in Quality and Balanced mode AND increased frame rate due to Frame Generation. I was seeing reductions of as much as 100W when using DLSS, with power draw in various games fluctuating instead of staying consistently high. For example, Control was fluctuating between 360W and 390W when using DLSS. A Plague Tale: Requiem would run at around 412W to 416W consistently, but once I turned on DLSS, the power draw went down to around 364W. I think that’s a pretty great feat of engineering: not only providing some great uplift, but also reducing the amount of power the card draws, at maybe a slight cost in image quality in some situations and an improvement in image quality in others.
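To put the A Plague Tale: Requiem numbers in perspective, here is a quick sketch of the savings. It takes the midpoint of the 412W to 416W range as the baseline, which is my own simplification, and the helper name is hypothetical.

```python
def power_savings(baseline_watts, dlss_watts):
    """Return (watts saved, percent saved) relative to the baseline draw."""
    saved = baseline_watts - dlss_watts
    return saved, saved / baseline_watts * 100


# Figures quoted above: roughly 412W to 416W without DLSS, around 364W with it.
baseline = (412 + 416) / 2  # midpoint of the observed range (an assumption)
saved_watts, saved_percent = power_savings(baseline, 364)
print(f"Saved about {saved_watts:.0f}W ({saved_percent:.1f}%)")
```

That works out to roughly a 12% drop in power draw in that one title.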

Frame generation is the controversial part of DLSS 3 and I think it’ll take some time before the public and press really get a good handle on it and whether it has any issues with gameplay and picture quality. I, for one, couldn’t see anything weird in my play throughs with DLSS 3 on. In some games I’d see things that caught my eye with DLSS 2 enabled, like shimmering textures and missing shadows, but any visual anomalies produced by Frame Generation weren't visible to my naked eye when playing. Digital Foundry does show some examples of occlusion issues in their piece on DLSS 3 with Marvel’s Spider-Man and I’m sure it does happen here and there in the games I tested, but I couldn’t tell you I saw it in real time. And maybe that’s OK in the sense that if you don’t notice it and it’s producing better performance without hindering latency, then it’s something to turn on and not worry about what it’s doing.

I asked NVIDIA what their response is to people who say Frame Generation produces fake frames in a game. They said, “The goal of a high frame rate is to improve the user’s perception of how smoothly a rendered scene is seen by the eye. By increasing the number of frames output to the display, the distance pixels travel from one frame to the next is decreased thus resulting in a smoother perception. Additionally, by generating frames there are more GPU resources available to add ray-tracing and higher quality graphics, resulting in more beautiful and detailed frames which, again, improves a user’s perception of the rendered scene. The frame generation algorithm takes two rendered frames and generates a new frame that properly transitions between the rendered frames resulting in an increased frame rate with smoother visuals that are significantly more pleasing to the eye.”
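The point about pixel travel is easy to check with arithmetic. The sketch below assumes an object moving across the screen at a constant, made-up speed and shows how far it jumps between consecutive frames at different frame rates.

```python
def pixels_per_frame(speed_px_per_second, fps):
    """Distance an object moves between two consecutive displayed frames."""
    return speed_px_per_second / fps


# Hypothetical object crossing half of a 3840-pixel-wide screen each second.
speed = 1920
for fps in (60, 120):
    print(f"{fps} FPS: {pixels_per_frame(speed, fps):.0f} px per frame")
```

Doubling the displayed frame rate halves the jump between frames, which is the smoother perception the quote describes.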

With latency, there was some fear that the Frame Generation aspect would make it seem a little off. Before I even did any numbers testing, I just played Cyberpunk 2077 with both DLSS 3 off and on. In all honesty, I couldn’t tell the difference. I ran around the city, fired my guns, had some shootouts, and I didn’t notice any lag at all with Frame Generation on. I made sure to not even have the numbers on my screen while playing and I was really happy with what I saw and felt.

DLSS 3 is where NVIDIA gets the 2-4X performance over the RTX 3090 Ti, and I think that figure assumes you are using Performance mode for DLSS Super Resolution. I didn’t use Performance, but you can still see the massive gains going from no DLSS on the RTX 3090 Ti to DLSS 3 on the RTX 4090 in Quality and Balanced modes. Especially in a title like Microsoft Flight Simulator, DLSS 3 almost doubles the performance. Cyberpunk 2077 with RTX at high settings runs phenomenally in 4K. The RTX 4090 can produce frames while waiting on the CPU, hence the increased performance over rasterization and DLSS 2 in games like Microsoft Flight Simulator. Oh, and yes, I did try to see if DLSS 3 would work in Microsoft Flight Simulator in VR. Unfortunately, performance drops as soon as you turn on VR mode. NVIDIA's FrameView couldn't show me the numbers because the counter in the upper part of my screen was illegible, but I wasn't getting consistently smooth performance when moving my head around. But man, if NVIDIA ever gets it to work in VR, that would help immensely when playing this game in that mode.

For those that worry about DLSS 2 being left behind, DLSS 3 is built on top of DLSS 2. And from what I’ve seen in the three games I was able to test, there’s an option to turn off Frame Generation if you don’t want to use it, which makes it essentially DLSS 2. Hopefully, all the other developers follow suit and let you choose to have it enabled or disabled. If you don’t have an Ada Lovelace card, the Frame Generation toggle is disabled while the other options remain available.

I can see why NVIDIA’s really pushing DLSS 3. Well, besides it being exclusive to their line of cards, it does produce tangible benefits. Cyberpunk 2077, A Plague Tale: Requiem, and Microsoft Flight Simulator are “slower” paced games so it’ll be interesting to see how this does in say more fast twitch games. Over 35 games and apps will have DLSS 3 available in the near future so I’m excited to see how NVIDIA improves Frame Generation over time, similar to the way DLSS has improved greatly from its introduction to now. On October 17th, Xbox Insiders can get access to the beta for Microsoft Flight Simulator with DLSS 3 support. A Plague Tale: Requiem will launch with DLSS 3 support.

Content creators will be happy to hear about the inclusion of AV1 hardware encoding in the RTX 4090. AV1 produces better quality than H.264 at comparable bitrates. That means streamers can show off higher quality streams without using more bandwidth. The RTX 4090 has dual AV1 encoders and can support up to 8K60 recordings with export speeds up to twice as fast. I use the NVENC encoders on older cards for things like real time transcoding and shrinking my recordings without losing as much quality, and it’s great to see the GeForce RTX 4090 add AV1 encoding to its lineup of supported codecs.

The GeForce RTX 4090 will be out tomorrow for purchase. It’s a performance beast and doesn’t use as much power as people initially thought it would. DLSS 3 is pretty awesome so far, and as more titles get support and time goes on, we’ll have a better grasp on whether it’s as solid a gaming tech as DLSS 2 has been. Coming in at $1599, it’s $500 more than the GeForce RTX 3090 Ti currently, but you can see it’s got a big performance leap over the previous generation. It can also be said it’s only coming in at $100 more than the release price of the GeForce RTX 3090. But it is a lot of money to drop on a video card, no two ways about it. If you are going to game at 4K at high refresh rates, you’ll want this card to push today’s and future games at high settings. Hopefully, availability will be plentiful now and those that do want one can pick one up easily at or near launch.

The most powerful video card right now, the GeForce RTX 4090 delivers incredible performance for 4K gaming and DLSS 3 looks like it delivers some great uplift in titles that support it.

Rating: 9 Class Leading

* The product in this article was sent to us by the developer/company.

About Author

I've been reviewing products since 1997 and started out at Gaming Nexus. As one of the original writers, I was tapped to do action games and hardware. Nowadays, I work with a great group of folks on here to bring to you news and reviews on all things PC and consoles.

As for what I enjoy, I love action and survival games. I'm more of a PC gamer now than I used to be, but still enjoy the occasional console fare. Lately, I've been playing a ton of retro games after building an arcade cabinet for myself and the kids. There are some old games I love to revisit, and the cabinet really does a great job of bringing back that nostalgic feeling of going to the arcade.
